Projects

Independent products built over the past six months using AI-native workflows — from PRD through production.

Quantum Threat Index

Risk intelligence dashboard tracking quantum computing threats across sectors with primary-source citations and transparent evidence tiering.

The Product

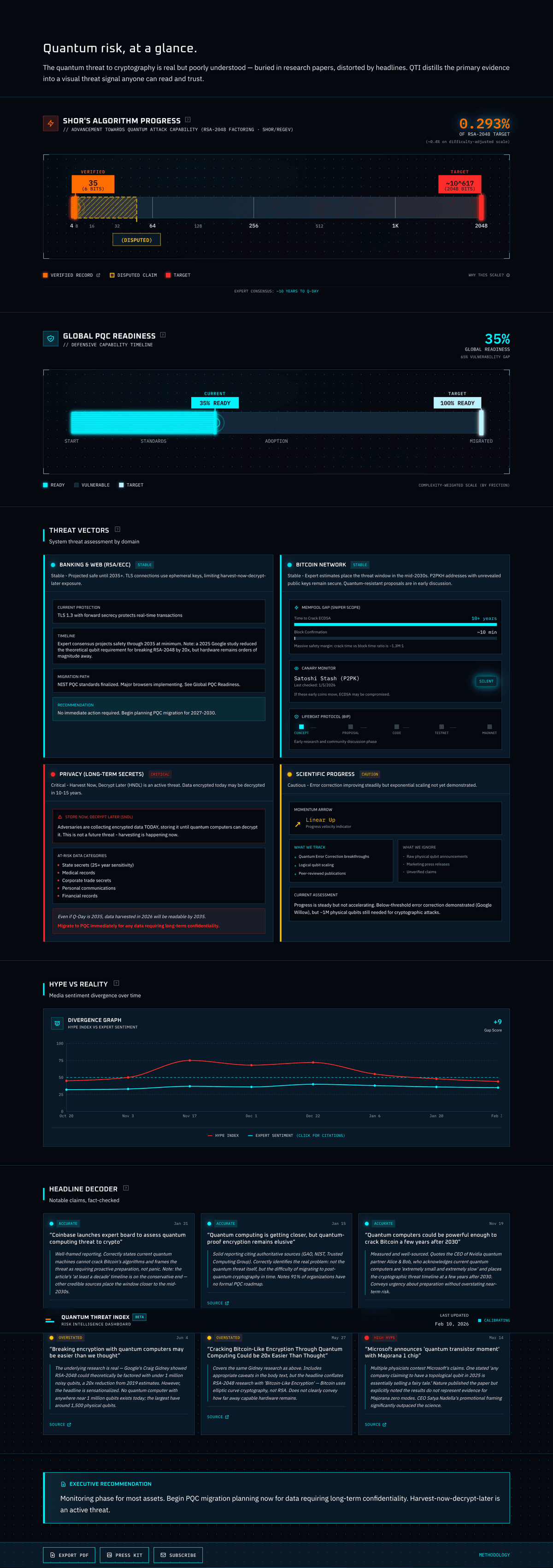

Quantum computing risk is simultaneously overhyped and underestimated. Mainstream headlines cycle between apocalyptic “Q-Day” predictions and dismissive hand-waving, while the most authoritative sources (peer-reviewed papers, NIST updates) publish annually or slower. For anyone making actual decisions about cryptographic risk, the gap between those two speeds is a real problem.

QTI is a risk intelligence dashboard that tracks where the quantum threat actually stands, sector by sector, with transparent citations and evidence tiering. I built it end-to-end in roughly one month and deployed it live, with AI agents as collaborators in every phase of the project.

Domain Research as Multi-Agent Orchestration

I’m a frontend/full-stack engineer, not a physicist or cryptographer. Building a credible product in this space required genuine domain understanding before writing any code or spec.

I ran parallel research sessions across multiple AI tools, each chosen for different strengths. Perplexity and Gemini Deep Research produced structured literature reviews covering Shor’s algorithm progress, PQC standardization timelines, and the “harvest now, decrypt later” threat model. ChatGPT handled interactive deep-dives when I needed to drill into specific concepts (error correction thresholds, logical vs. physical qubit distinctions). Claude provided synthesis and critical analysis of conflicting claims across sources.

This process surfaced several commonly repeated claims that turned out to be outdated or misleading, including inflated factoring records that conflated classical optimization with actual quantum computation. The research phase directly shaped product decisions: the evidence tiering system, the “Ghost Bar” governance rules for disputed claims, and the sector-specific threat model all emerged from patterns I found in how misinformation propagates in this space.

Design Decisions That Define the Dashboard

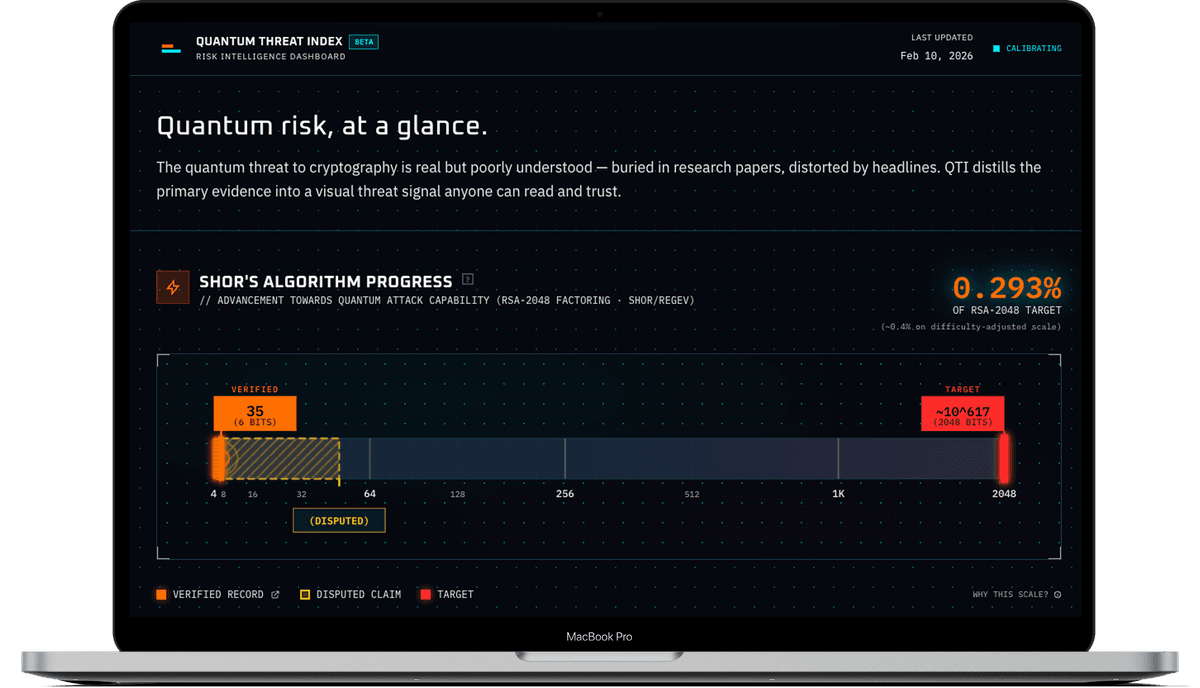

Logarithmic honesty in the Shor’s Bar. The hero visualization tracks progress toward breaking RSA-2048 encryption. Current verified record: factoring 35 (6 bits). Target: 2048 bits. A linear scale would show roughly 0.3% progress (6 of 2048 bits), but actual computational difficulty places it closer to 0.001%. I developed a hybrid logarithmic scale and validated the mathematical approach by cross-referencing multiple LLMs, since getting this wrong would undermine QTI’s core premise. A “Ghost Bar” overlays disputed claims alongside the verified record, with links to expert rebuttals.
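The exact formula behind the hybrid scale isn't given here, so what follows is only a minimal sketch of one way such a scale could work, under two stated assumptions: that difficulty grows roughly with the square of key size (the `costExponent` parameter), and that the bar renders six decades on a log10 axis. `difficultyFraction` and `logBarPosition` are hypothetical names, not QTI code.

```typescript
// Hypothetical sketch: the cost exponent and the number of decades are
// illustrative assumptions, not QTI's actual parameters.

/** Fraction of the way to a target key size, weighted by an assumed cost exponent. */
function difficultyFraction(
  achievedBits: number,
  targetBits: number,
  costExponent = 2, // assumption: difficulty grows roughly as bits^2
): number {
  if (achievedBits <= 0 || targetBits <= 0) return 0;
  const ratio = Math.min(achievedBits / targetBits, 1);
  return ratio ** costExponent;
}

/** Map a small fraction onto [0, 1] using a log10 axis spanning `decades` orders of magnitude. */
function logBarPosition(fraction: number, decades = 6): number {
  if (fraction <= 0) return 0;
  const exponent = Math.log10(fraction); // e.g. 8.6e-6 gives about -5.07
  const clamped = Math.max(-decades, Math.min(0, exponent));
  return (clamped + decades) / decades;
}

const linear = 6 / 2048; // about 0.29% on a linear bit-count scale
const weighted = difficultyFraction(6, 2048); // about 8.6e-6, just under 0.001%
const position = logBarPosition(weighted); // about 0.16 of the bar's width
```

Under these assumptions the difficulty-weighted value for 6 of 2048 bits lands just under 0.001%, yet still occupies a visible slice of the bar because the axis is logarithmic. A different cost exponent or decade range would move both numbers.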

Evidence tiering enforced at the data layer. Every data point is classified as Primary (peer-reviewed papers, standards body announcements), Secondary (expert commentary from a curated whitelist), or Context (mainstream headlines, press releases). This classification constrains what can justify different types of dashboard updates, and became foundational when the project pivoted to include an automated newsroom engine.
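A data-layer gate like this can be expressed as a small rule table. The sketch below assumes three hypothetical update kinds and a minimum tier for each; only the tier names come from the text, and the rule table is illustrative rather than QTI's actual policy.

```typescript
// Hypothetical sketch: tier names come from the text; the update kinds,
// the DataPoint shape, and the rule table are illustrative assumptions.

type EvidenceTier = "primary" | "secondary" | "context";

type UpdateKind =
  | "status-change" // e.g. moving a sector's traffic light
  | "commentary" // e.g. adding an expert-sentiment data point
  | "headline-decode"; // e.g. rating a mainstream headline

interface DataPoint {
  id: string;
  tier: EvidenceTier;
  sourceUrl: string;
}

// Weakest tier allowed to justify each kind of update (assumed policy).
const REQUIRED_TIER: Record<UpdateKind, EvidenceTier> = {
  "status-change": "primary",
  commentary: "secondary",
  "headline-decode": "context",
};

// Lower rank means stronger evidence.
const RANK: Record<EvidenceTier, number> = { primary: 0, secondary: 1, context: 2 };

/** True if at least one data point is strong enough for this kind of update. */
function canJustify(kind: UpdateKind, evidence: DataPoint[]): boolean {
  const needed = RANK[REQUIRED_TIER[kind]];
  return evidence.some((point) => RANK[point.tier] <= needed);
}

// Usage with placeholder URLs.
const headline: DataPoint = { id: "h1", tier: "context", sourceUrl: "https://example.com/headline" };
const paper: DataPoint = { id: "p1", tier: "primary", sourceUrl: "https://example.com/paper" };

const statusFromHeadline = canJustify("status-change", [headline]); // false
const decodeFromHeadline = canJustify("headline-decode", [headline]); // true
```

Encoding the policy as data rather than scattered conditionals means a new update type has to declare its evidence bar before it can be used.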

One interface, four reading depths. The dashboard serves journalists, portfolio managers, CISOs, and anxious laypeople through the same page. Rather than hiding complexity behind clicks, the layout uses visual hierarchy to create natural reading layers: traffic-light status indicators scannable from a projector, with forensic detail (mempool vulnerability windows, Satoshi-address canary monitors, PQC migration tracking) visible on scroll without interaction gates. An early progressive-disclosure prototype revealed that click-to-expand modules undermined the “glanceable dashboard” promise, so I shifted to a density-over-disclosure model.

Implementation: Directing AI Through Structured Specs

My role during implementation was architect and product director. I wrote structured specifications with clear constraints, then delegated implementation to Claude Code agents, reviewing output for architectural consistency and product alignment rather than line-by-line code review.

The workflow followed an artifact system I developed for AI-native product builds:

- PRODUCT.md defined what to build: features, personas, scope boundaries

- BUILD-STRATEGY.md captured why each technical decision was made

- CONVENTIONS.md evolved during implementation as patterns emerged, with each session’s learnings compounding into the next

Each document served as a contract between me and the AI agents working in the codebase. Claude Code’s builder agent received focused specs and narrow context for each task. A code-reviewer agent evaluated changes against established conventions in an isolated context window, catching issues the builder wouldn’t find in its own work. A doc-verifier agent flagged documentation drift. This multi-agent pipeline was refined continuously throughout QTI’s development, with quality gates enforced through convention-based checks rather than manual checklists.
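A reviewer agent's judgment can't be reduced to pattern matching, but the mechanical portion of a convention gate can. As a minimal sketch, assuming conventions are written as per-path forbidden patterns, a check might look like this; the rule ids, the `src/components/` layout, and the file names are hypothetical.

```typescript
// Hypothetical sketch: rule ids, patterns, and file paths are invented for illustration.

interface ConventionRule {
  id: string;
  description: string;
  appliesTo: RegExp; // which file paths the rule checks
  forbidden: RegExp; // a line matching this violates the convention
}

interface Violation {
  ruleId: string;
  file: string;
  line: number; // 1-based line number
}

const RULES: ConventionRule[] = [
  {
    id: "no-raw-fetch-in-components",
    description: "Components get data through a shared data layer, not fetch()",
    appliesTo: /^src\/components\/.*\.tsx$/,
    forbidden: /\bfetch\(/,
  },
  {
    id: "no-any",
    description: "Avoid explicit any annotations",
    appliesTo: /\.tsx?$/,
    forbidden: /:\s*any\b/,
  },
];

/** Scan changed files (path -> contents) and report every line that breaks a rule. */
function checkConventions(files: Record<string, string>, rules = RULES): Violation[] {
  const violations: Violation[] = [];
  for (const [file, source] of Object.entries(files)) {
    const lines = source.split("\n");
    for (const rule of rules) {
      if (!rule.appliesTo.test(file)) continue;
      lines.forEach((text, index) => {
        if (rule.forbidden.test(text)) {
          violations.push({ ruleId: rule.id, file, line: index + 1 });
        }
      });
    }
  }
  return violations;
}

// Usage: a hypothetical changed component with two violations.
const found = checkConventions({
  "src/components/ShorsBar.tsx": "const data = await fetch('/api/records');\nlet x: any = 1;",
});
```

A non-empty result can fail the gate automatically, leaving the reviewer agent free to focus on architectural consistency rather than rote rules.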

Custom SVG visualizations, the multi-format export pipeline (PNG/SVG/PDF via react-pdf and html-to-image), four complete visual themes, and the full dashboard component library were all built through this spec-driven, directed-agent workflow.

The Newsroom Pivot

After shipping the dashboard, I hit a sustainability problem. The visualizations worked. The data model was sound. But keeping everything current required weekly manual monitoring of arXiv, NIST announcements, expert blogs, and mainstream headlines.

The realization: collection and synthesis could be automated while preserving human judgment at the publish gate. This shifted QTI from a manually curated dashboard to a product with an AI-native newsroom engine at its core.

The newsroom runs weekly via GitHub Actions, ingesting from 10 configured sources (arXiv, IETF Datatracker, NIST, RFC Editor, expert blogs like Shtetl-Optimized, and browser/OS adoption feeds). It normalizes items into a common schema, clusters related items into storylines anchored on primary evidence, generates structured proposals with citations and confidence levels, and opens a GitHub PR. A human editor reviews and merges. Nothing auto-publishes.
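The normalize-then-cluster step can be sketched in a few dozen lines. This assumes a hypothetical `NormalizedItem` shape and uses simple keyword overlap as the similarity measure; the engine's real schema and clustering logic aren't described here, so treat this only as an illustration of the anchor-first rule, where a storyline can only start from a primary-tier item.

```typescript
// Hypothetical sketch: field names, keyword-overlap similarity, and the
// threshold are assumptions, not the newsroom engine's actual schema or logic.

type EvidenceTier = "primary" | "secondary" | "context";

interface NormalizedItem {
  id: string;
  source: string; // e.g. "arxiv", "nist"
  tier: EvidenceTier;
  title: string;
  url: string;
  publishedAt: string; // ISO 8601 timestamp
  keywords: string[];
}

interface Storyline {
  anchor: NormalizedItem; // always a primary-tier item
  supporting: NormalizedItem[]; // secondary or context items attached to it
}

/** Share of the shorter keyword list that appears in the other (case-insensitive). */
function overlap(a: string[], b: string[]): number {
  const setA = new Set(a.map((k) => k.toLowerCase()));
  const shared = b.filter((k) => setA.has(k.toLowerCase())).length;
  return shared / Math.max(1, Math.min(a.length, b.length));
}

/** Anchor-first: only primary items seed storylines; others attach or stay unanchored. */
function clusterAnchorFirst(items: NormalizedItem[], threshold = 0.5) {
  const storylines: Storyline[] = items
    .filter((item) => item.tier === "primary")
    .map((anchor): Storyline => ({ anchor, supporting: [] }));
  const unanchored: NormalizedItem[] = [];

  for (const item of items) {
    if (item.tier === "primary") continue;
    let best: Storyline | undefined;
    let bestScore = threshold;
    for (const story of storylines) {
      const score = overlap(story.anchor.keywords, item.keywords);
      if (score >= bestScore) {
        best = story;
        bestScore = score;
      }
    }
    if (best) best.supporting.push(item);
    else unanchored.push(item);
  }
  return { storylines, unanchored };
}

// Usage with placeholder items.
const exampleItem = (id: string, tier: EvidenceTier, keywords: string[]): NormalizedItem => ({
  id, source: "example", tier, title: id, url: `https://example.com/${id}`,
  publishedAt: "2025-01-01T00:00:00Z", keywords,
});

const result = clusterAnchorFirst([
  exampleItem("p1", "primary", ["ml-kem", "fips-203"]),
  exampleItem("s1", "secondary", ["ML-KEM", "migration"]),
  exampleItem("c1", "context", ["q-day", "bitcoin"]),
]);
```

Non-primary items that match no anchor stay unclustered rather than seeding a storyline of their own, so a burst of headlines alone never becomes a story.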

What Shipped

Designed, built, and deployed in approximately one month as the sole developer. Live at quantumthreatindex.com.

Dashboard:

- Shor’s Bar: custom SVG with hybrid log scale and Ghost Bar for disputed claims

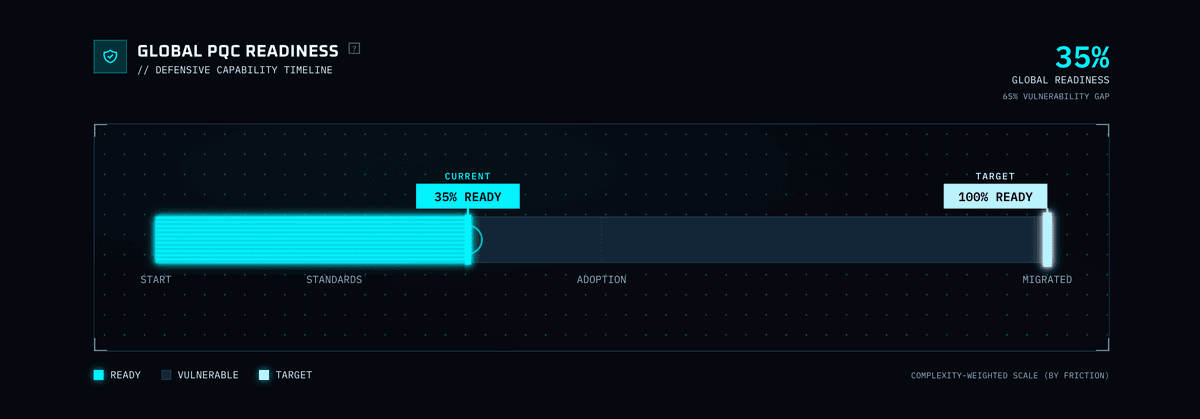

- PQC Readiness pipeline tracker (NIST standards → browser/OS support → enterprise migration)

- Four threat modules with typed deep-dive panels (Banking, Bitcoin, Privacy, Scientific Progress)

- Divergence Graph: Hype vs. Expert Sentiment time-series with citation modals

- Headline Decoder with traffic-light verdicts

- Board Mode one-page PDF export and Press Kit (PNG/SVG multi-format)

- Four complete visual themes

Newsroom Engine:

- 10-source ingestion pipeline with Zod-validated schemas

- Evidence-tiered proposal generation with anti-hype guardrails

- Anchor-first clustering requiring primary-source evidence for every storyline

- GitHub Actions workflow: weekly schedule plus manual emergency dispatch
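The anti-hype guardrails can be pictured as checks run on each generated proposal before the PR is opened. In this minimal sketch, the `Proposal` shape, the hype-term list, and the two-primary-citations rule for high confidence are all assumptions for illustration; plain TypeScript interfaces stand in for Zod validation to keep the example dependency-free.

```typescript
// Hypothetical sketch: the Proposal shape, hype terms, and confidence rule
// are illustrative assumptions, not the engine's real guardrails.

type EvidenceTier = "primary" | "secondary" | "context";
type Confidence = "low" | "medium" | "high";

interface Citation {
  url: string;
  tier: EvidenceTier;
}

interface Proposal {
  storylineId: string;
  summary: string;
  confidence: Confidence;
  citations: Citation[];
}

// Phrases that suggest hype rather than evidence (assumed list).
const HYPE_TERMS: RegExp[] = [/\bq-day\b/i, /\bbreaks? (all )?encryption\b/i, /\bimminent\b/i];

/** Return every guardrail issue for a proposal; an empty array means it passes. */
function guardrailIssues(p: Proposal): string[] {
  const issues: string[] = [];
  const primaryCount = p.citations.filter((c) => c.tier === "primary").length;

  if (primaryCount === 0) issues.push("no primary-source citation");
  if (p.confidence === "high" && primaryCount < 2) {
    issues.push("high confidence needs at least two primary citations");
  }
  for (const term of HYPE_TERMS) {
    if (term.test(p.summary)) issues.push(`hype phrasing matched ${term}`);
  }
  return issues;
}

// Usage with placeholder proposals.
const flagged = guardrailIssues({
  storylineId: "s-1",
  summary: "Q-Day is imminent",
  confidence: "high",
  citations: [{ url: "https://example.com/news", tier: "context" }],
});

const clean = guardrailIssues({
  storylineId: "s-2",
  summary: "NIST publishes FIPS 203",
  confidence: "medium",
  citations: [{ url: "https://example.com/standard", tier: "primary" }],
});
```

Returning a list of issues rather than a boolean means flagged proposals could be annotated in the PR for the human editor instead of being silently dropped.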

Stack: Next.js 16, TypeScript, Tailwind CSS 4, Recharts, react-pdf, Zod, Vercel